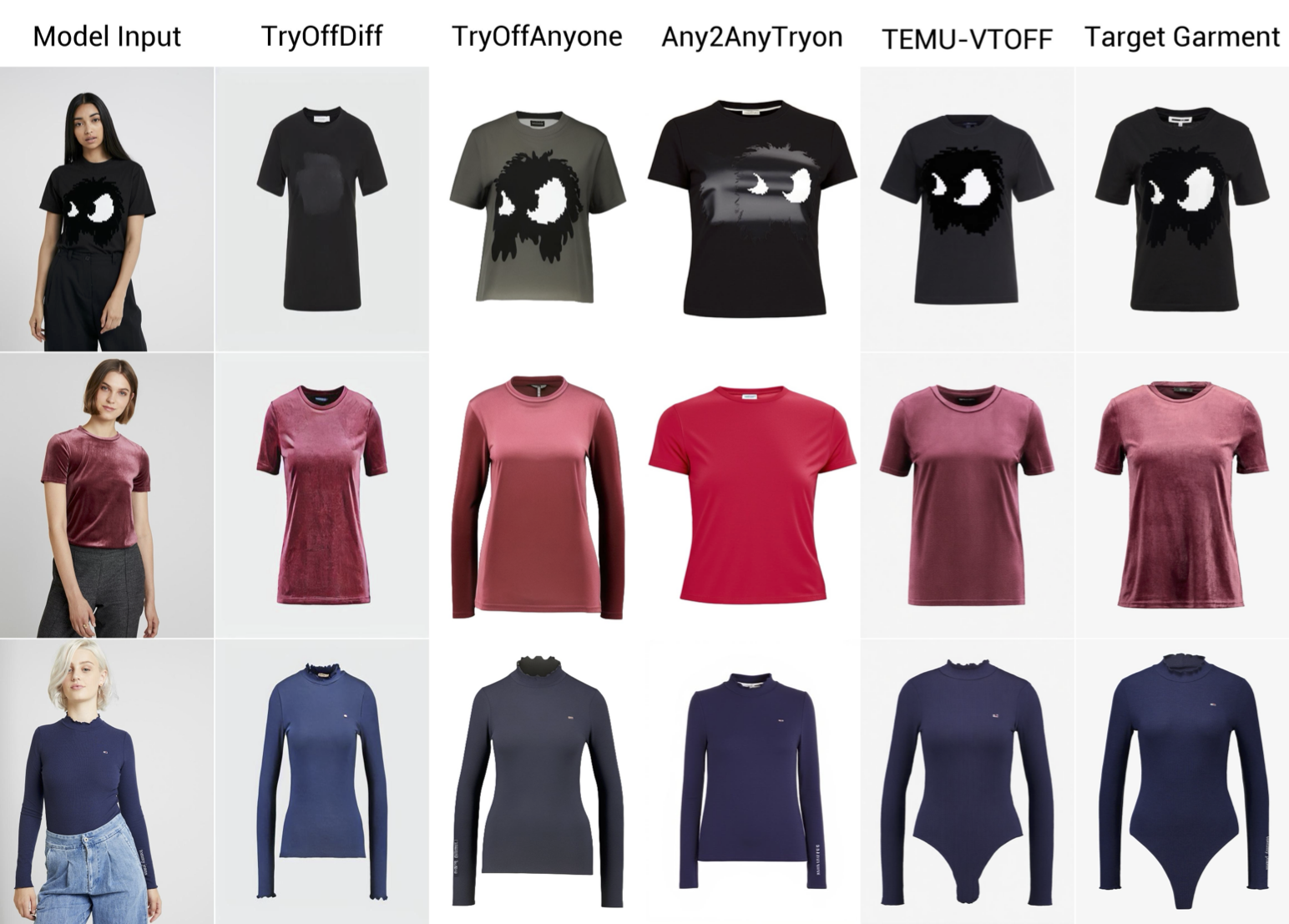

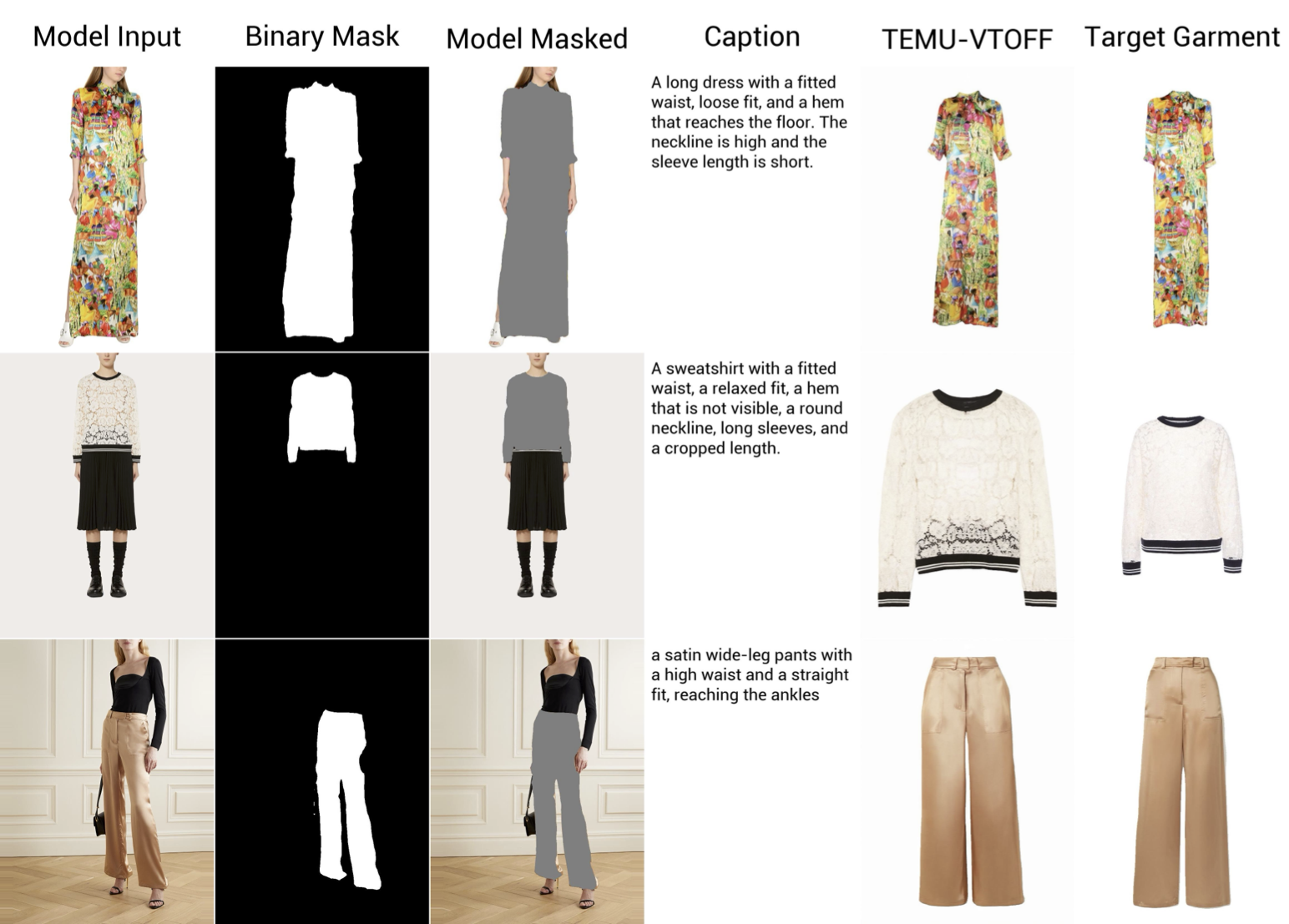

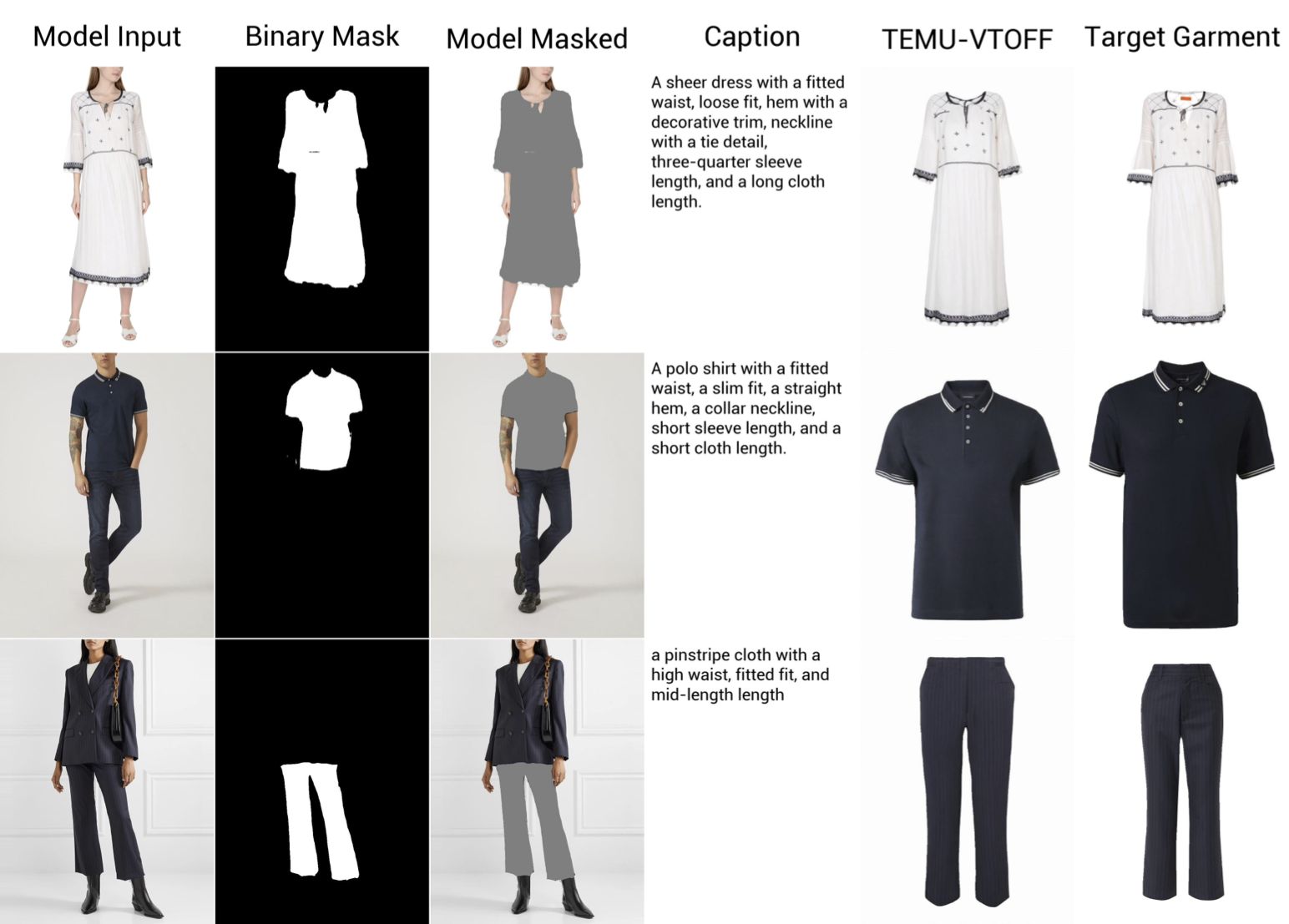

While virtual try-on (VTON) systems aim to render a garment onto a target person image, this paper tackles the novel task of virtual try-off (VTOFF), which addresses the inverse problem: generating standardized product images of garments from real-world photos of clothed individuals. Unlike VTON, which must resolve diverse pose and style variations, VTOFF benefits from a consistent and well-defined output format (typically a flat, lay-down-style representation of the garment), making it a promising tool for data generation and dataset enhancement. However, existing VTOFF approaches face two major limitations: (i) difficulty in disentangling garment features from occlusions and complex poses, often leading to visual artifacts, and (ii) restricted applicability to single-category garments (e.g., upper-body clothes only), limiting generalization. To address these challenges, we present Text-Enhanced MUlti-category Virtual Try-Off (TEMU-VTOFF), a novel architecture featuring a dual DiT-based backbone with a modified multimodal attention mechanism for robust garment feature extraction. To operate in a multi-category setting, our architecture receives garment information from multiple modalities, such as images, text, and masks. Finally, we propose an additional alignment module to further refine the generated visual details. Experiments on the VITON-HD and Dress Code datasets show that TEMU-VTOFF sets a new state of the art on the VTOFF task, significantly improving both visual quality and fidelity to the target garments.
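The dual-backbone idea can be illustrated with a toy, single-head attention layer. This is a minimal plain-Python sketch, not the authors' implementation: it assumes that the garment generator's queries attend to the concatenation of its own keys/values and the keys/values produced by the feature extractor at the same layer (the \( \mathbf{K}^l_{\text{extractor}} \) and \( \mathbf{V}^l_{\text{extractor}} \) of the framework figure). The function name `hybrid_attention` and the concatenation along the token axis are illustrative assumptions; the text only states that these features are injected into the generator's hybrid blocks.

```python
import math
from typing import List

Matrix = List[List[float]]  # row-major: one row per token


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Numerically stable softmax over each row."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def hybrid_attention(q_gen: Matrix, k_gen: Matrix, v_gen: Matrix,
                     k_ext: Matrix, v_ext: Matrix) -> Matrix:
    """Hypothetical hybrid block: generator queries attend to generator + extractor tokens.

    Assumption: extractor keys/values (K^l_extractor, V^l_extractor) are appended
    to the generator's own keys/values along the token axis.
    """
    keys = k_gen + k_ext      # (N_gen + N_ext) x d
    values = v_gen + v_ext    # (N_gen + N_ext) x d_v
    d = len(q_gen[0])
    # Scaled dot-product scores between every generator query and every key
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(q_gen, transpose(keys))]
    weights = softmax_rows(scores)  # each row sums to 1
    return matmul(weights, values)  # N_gen x d_v


# Toy example: one generator token, one extractor token, d = 2.
q_gen = [[1.0, 0.0]]
k_gen, v_gen = [[0.0, 1.0]], [[0.0, 0.0]]
k_ext, v_ext = [[1.0, 0.0]], [[1.0, 1.0]]
out = hybrid_attention(q_gen, k_gen, v_gen, k_ext, v_ext)
print(out)  # both entries equal the attention weight on the extractor token (about 0.67)
```

In the toy example, the extractor key matches the query, so it receives most of the attention weight and the output is pulled toward its value. Concatenating along the token axis lets each generator query decide how much to rely on extracted garment features versus its own tokens.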

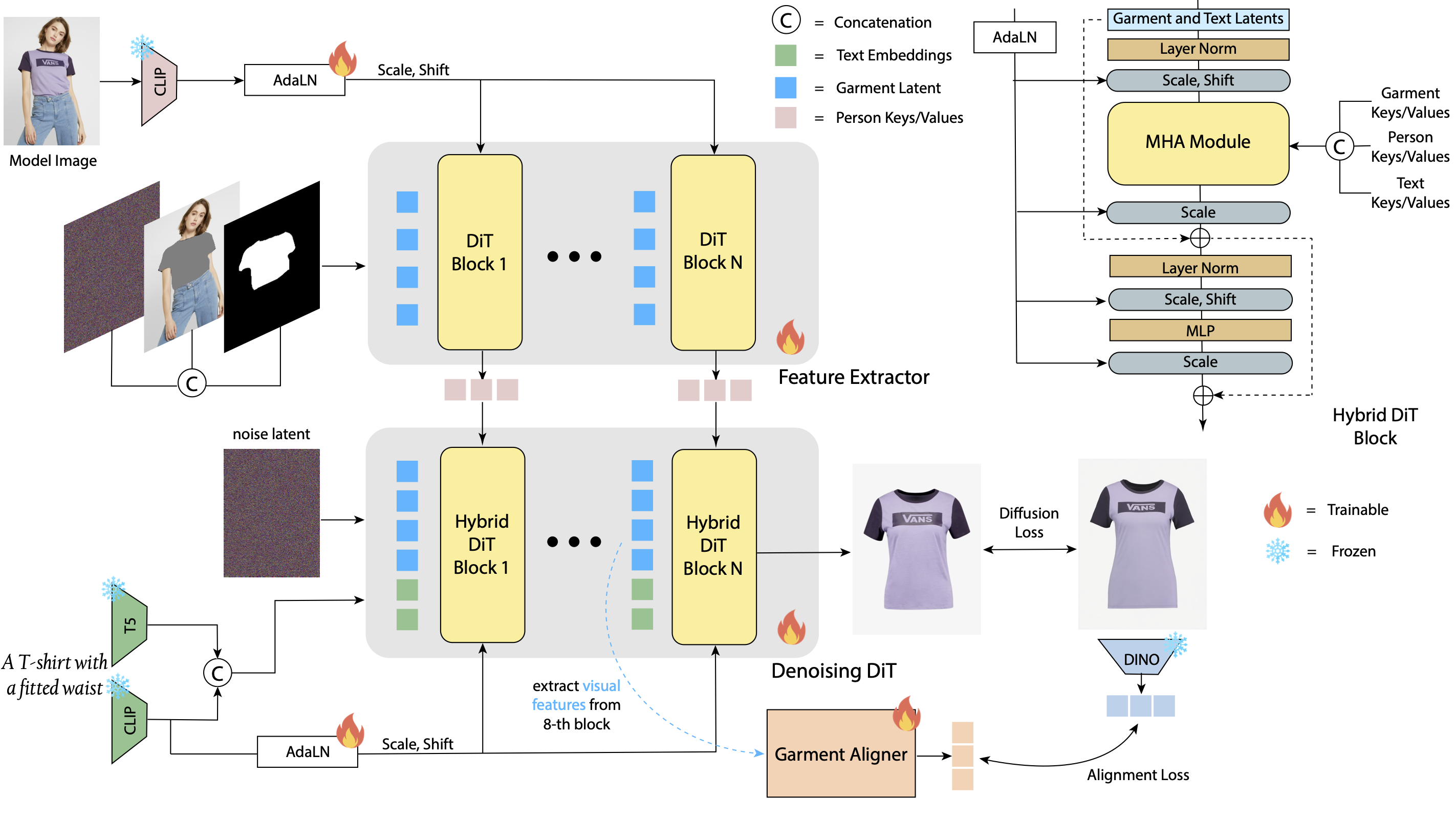

Overall Framework of TEMU-VTOFF. The feature extractor \( F_E \) processes spatial inputs (noise, masked image, and binary mask) and global inputs (the model image, via AdaLN). Its intermediate keys and values \( \mathbf{K}^l_{\text{extractor}} \) and \( \mathbf{V}^l_{\text{extractor}} \) at each layer \( l \) are injected into the corresponding hybrid blocks of the garment generator \( F_D \). The main DiT model then generates the final garment by leveraging the proposed MHA module. We train the model with a diffusion loss on the noise estimate and an alignment loss against clean DINOv2 features of the target garment.
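The two training objectives named in the caption can be sketched as follows. This is a hedged, plain-Python illustration rather than the paper's code: it assumes the diffusion term is a mean squared error between predicted and sampled noise, and it models the alignment term as a negative mean cosine similarity between (hypothetically) projected generator tokens and the clean DINOv2 tokens of the target garment, the form commonly used by representation-alignment methods. The helper names and the weight `lam` are hypothetical; the caption does not specify the similarity function, the projection, or the loss weighting.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def mse(pred: Vector, target: Vector) -> float:
    """Mean squared error between predicted and true noise (flattened)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def cosine(a: Vector, b: Vector, eps: float = 1e-8) -> float:
    """Cosine similarity; eps guards against division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)


def alignment_loss(projected: List[Vector], dino: List[Vector]) -> float:
    """Negative mean per-token cosine similarity (assumed form of the alignment loss)."""
    sims = [cosine(h, y) for h, y in zip(projected, dino)]
    return -sum(sims) / len(sims)


def total_loss(eps_pred: Vector, eps_true: Vector,
               projected: List[Vector], dino: List[Vector],
               lam: float = 0.5) -> float:
    """Diffusion (noise-prediction) loss plus a weighted alignment term; lam is hypothetical."""
    return mse(eps_pred, eps_true) + lam * alignment_loss(projected, dino)


# Perfect noise estimate and projected features pointing the same way as DINOv2's:
print(total_loss([0.1, -0.3], [0.1, -0.3], [[1.0, 0.0]], [[2.0, 0.0]]))  # close to -0.5
```

Lower is better for both terms: the alignment term reaches its minimum of -1 when every projected token points in the same direction as its DINOv2 counterpart, regardless of feature magnitude.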

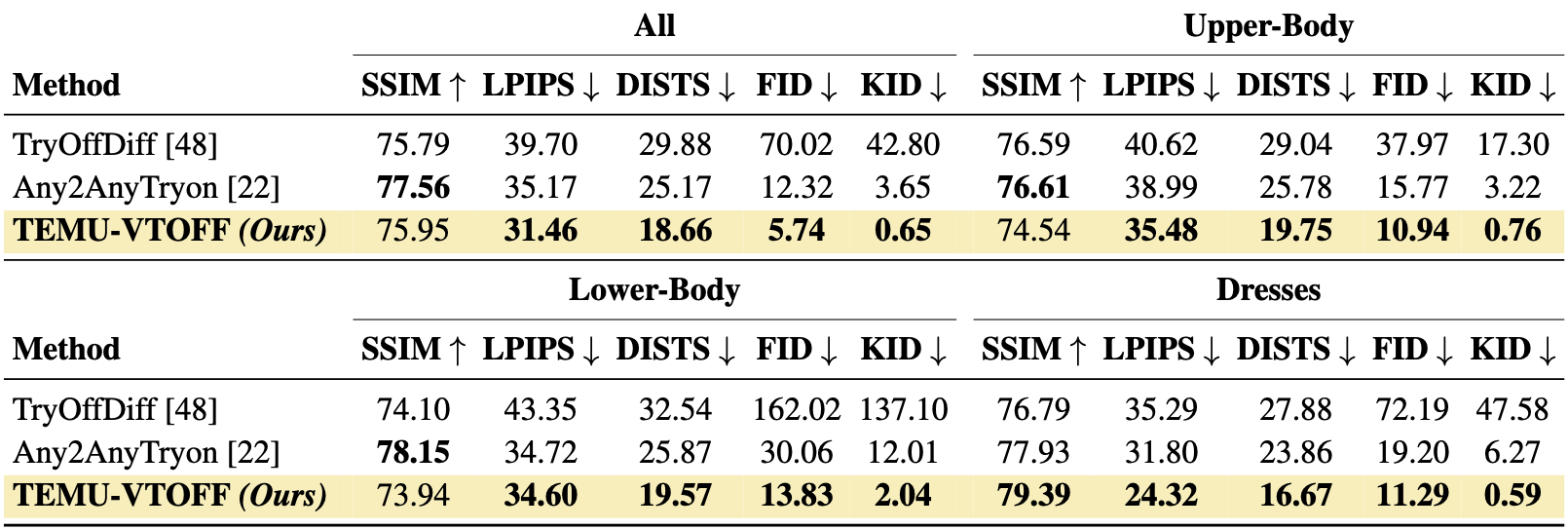

Quantitative results on the Dress Code dataset, considering both the entire test set and the three category-specific subsets.

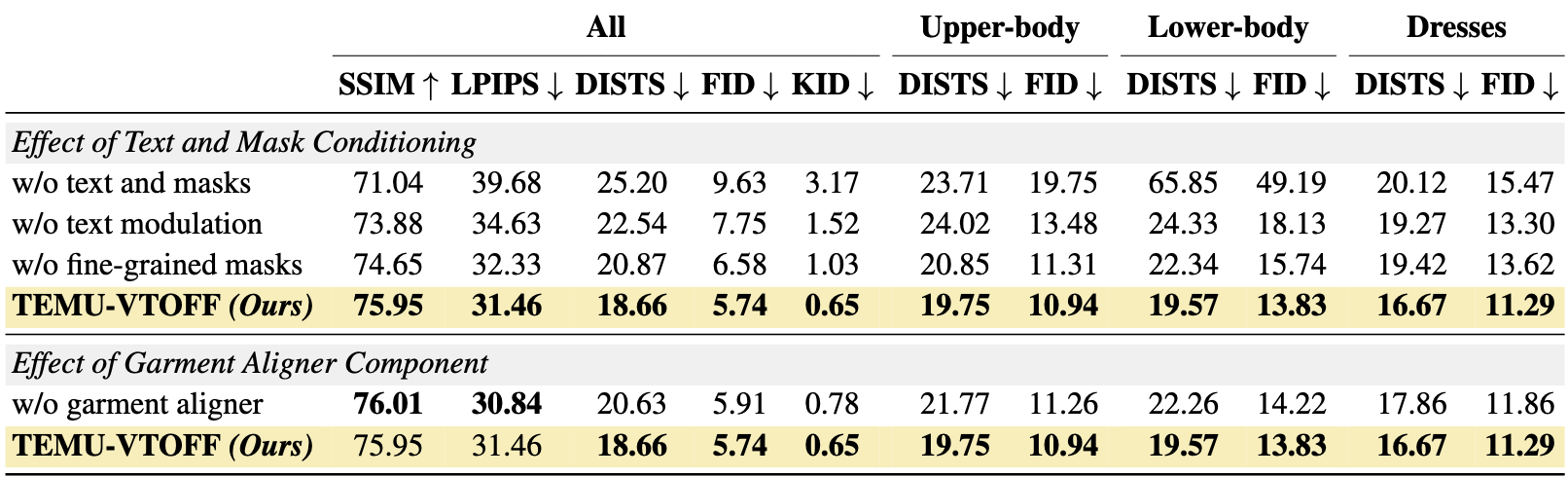

Ablation study of the proposed components on the Dress Code dataset.

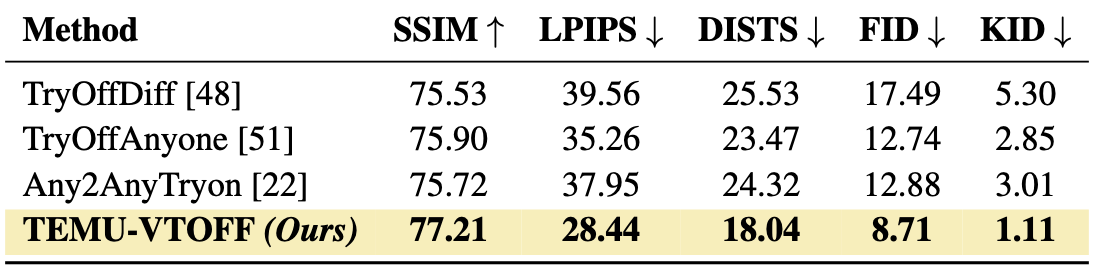

Quantitative results on the VITON-HD dataset.

@article{lobba2025inverse,
  title={Inverse Virtual Try-On: Generating Multi-Category Product-Style Images from Clothed Individuals},
  author={Lobba, Davide and Sanguigni, Fulvio and Ren, Bin and Cornia, Marcella and Cucchiara, Rita and Sebe, Nicu},
  journal={arXiv preprint arXiv:2505.21062},
  year={2025}
}